Explaining Predictions with SHAP

Using SHAP to interpret predictions from a classifier trained on the 20 Newsgroups dataset.

Introduction

This project demonstrates the use of SHAP (SHapley Additive exPlanations) to explain predictions made by a text classifier trained on a subset of the 20 Newsgroups dataset. By analyzing SHAP values, we identify the features (words) that drive the classifier’s predictions, for both correctly classified and misclassified documents.

Methodology

Dataset and Preprocessing

- Dataset: The 20 Newsgroups dataset (Atheism vs. Christianity categories) was used.

- Preprocessing:

  - Text was vectorized using TF-IDF to represent words numerically.

  - Features were limited to the top 1,000 terms for efficiency.

Classification

- Model: A linear Support Vector Machine trained using scikit-learn's SGDClassifier.

- Metrics: The classifier was evaluated using accuracy and a confusion matrix.
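
As a rough sketch of the training and evaluation step, the snippet below fits an `SGDClassifier` with hinge loss (which corresponds to a linear SVM) and derives accuracy from the confusion matrix. The documents, labels, and `random_state` are hypothetical placeholders chosen only to keep the example self-contained; the project's actual hyperparameters are not stated here.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import SGDClassifier
from sklearn.metrics import accuracy_score, confusion_matrix

# Hypothetical toy data: label 1 = Christianity, label 0 = Atheism.
train_docs = [
    "faith and prayer in the church",
    "science offers no evidence for god",
    "the church teaches faith and grace",
    "atheists argue from science and evidence",
]
train_labels = [1, 0, 1, 0]
test_docs = ["prayer and grace in church", "evidence from science"]
test_labels = [1, 0]

vectorizer = TfidfVectorizer(max_features=1000)
X_train = vectorizer.fit_transform(train_docs)
X_test = vectorizer.transform(test_docs)  # reuse the training vocabulary

# loss="hinge" makes SGDClassifier optimize a linear SVM objective.
clf = SGDClassifier(loss="hinge", random_state=0)
clf.fit(X_train, train_labels)
pred = clf.predict(X_test)

# Rows are true classes, columns are predicted classes.
cm = confusion_matrix(test_labels, pred, labels=[0, 1])
acc = accuracy_score(test_labels, pred)

print(cm)
print(f"accuracy = {acc:.4f}")
```

Accuracy is the sum of the diagonal of the confusion matrix divided by the total number of documents, and the off-diagonal cells count the misclassified documents that the SHAP analysis then examines.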

SHAP Explanations

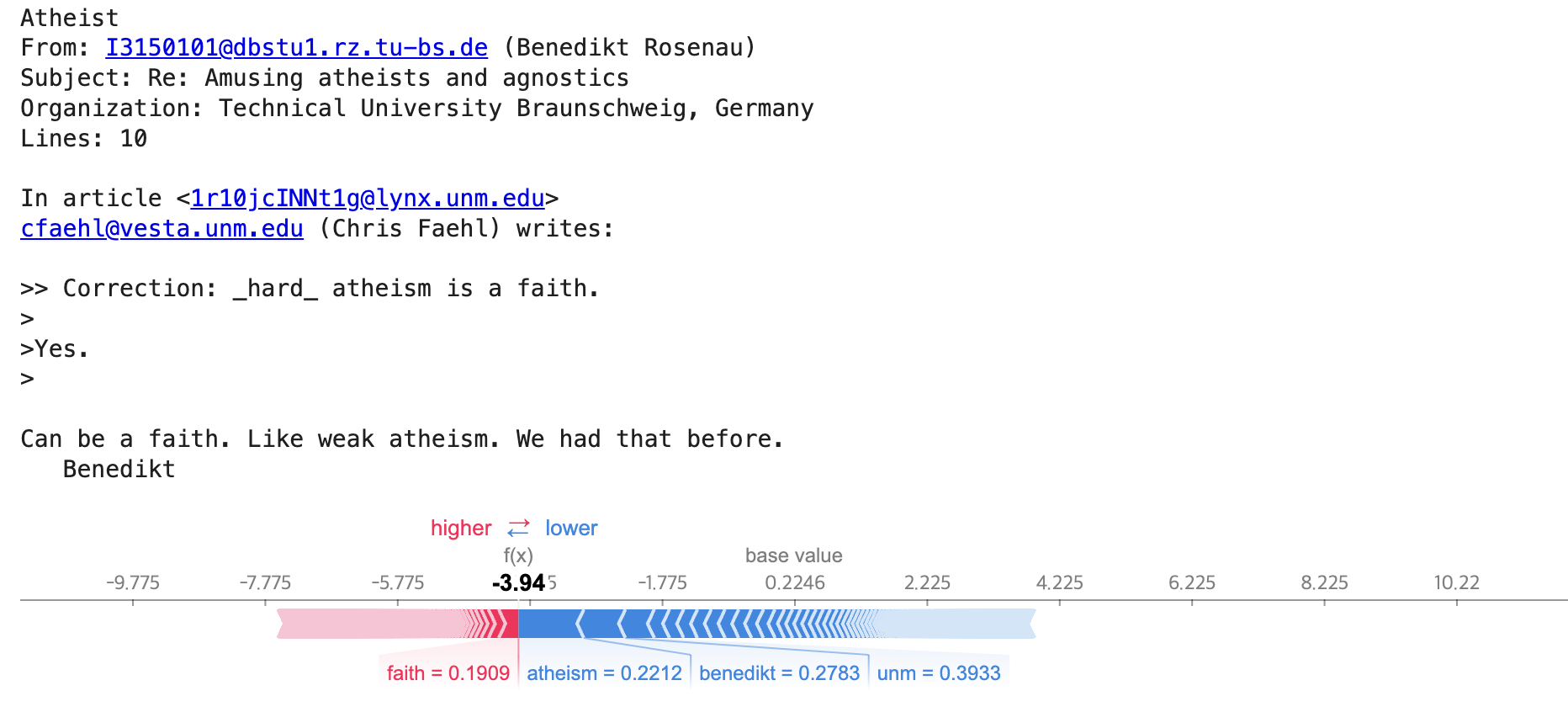

- Generated SHAP explanations for correctly classified and misclassified documents.

- Identified key features contributing to classification and misclassification.

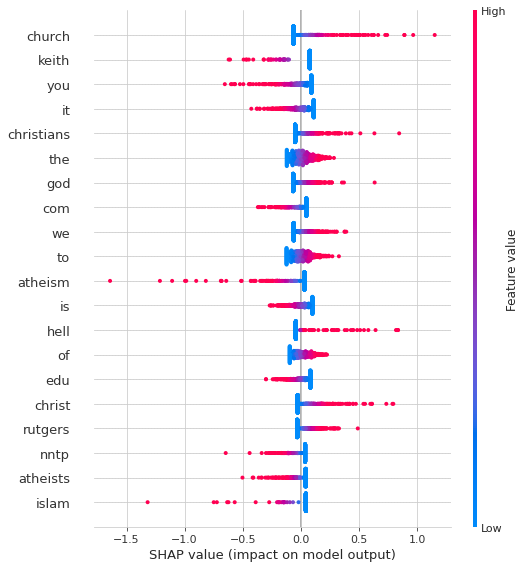

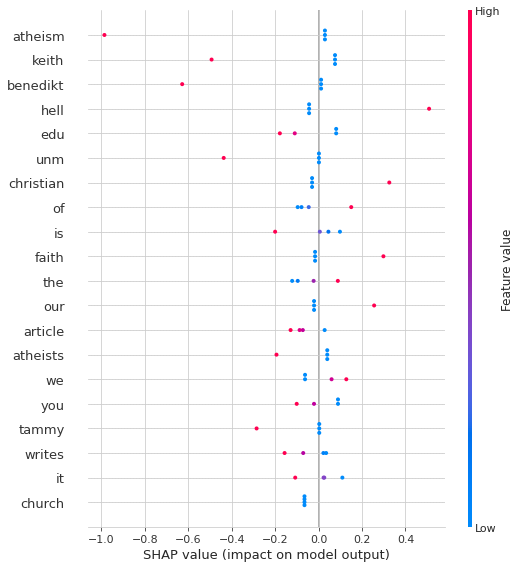

- Visualized SHAP values for individual documents and summarized feature importance across the dataset.

Results

SHAP Analysis

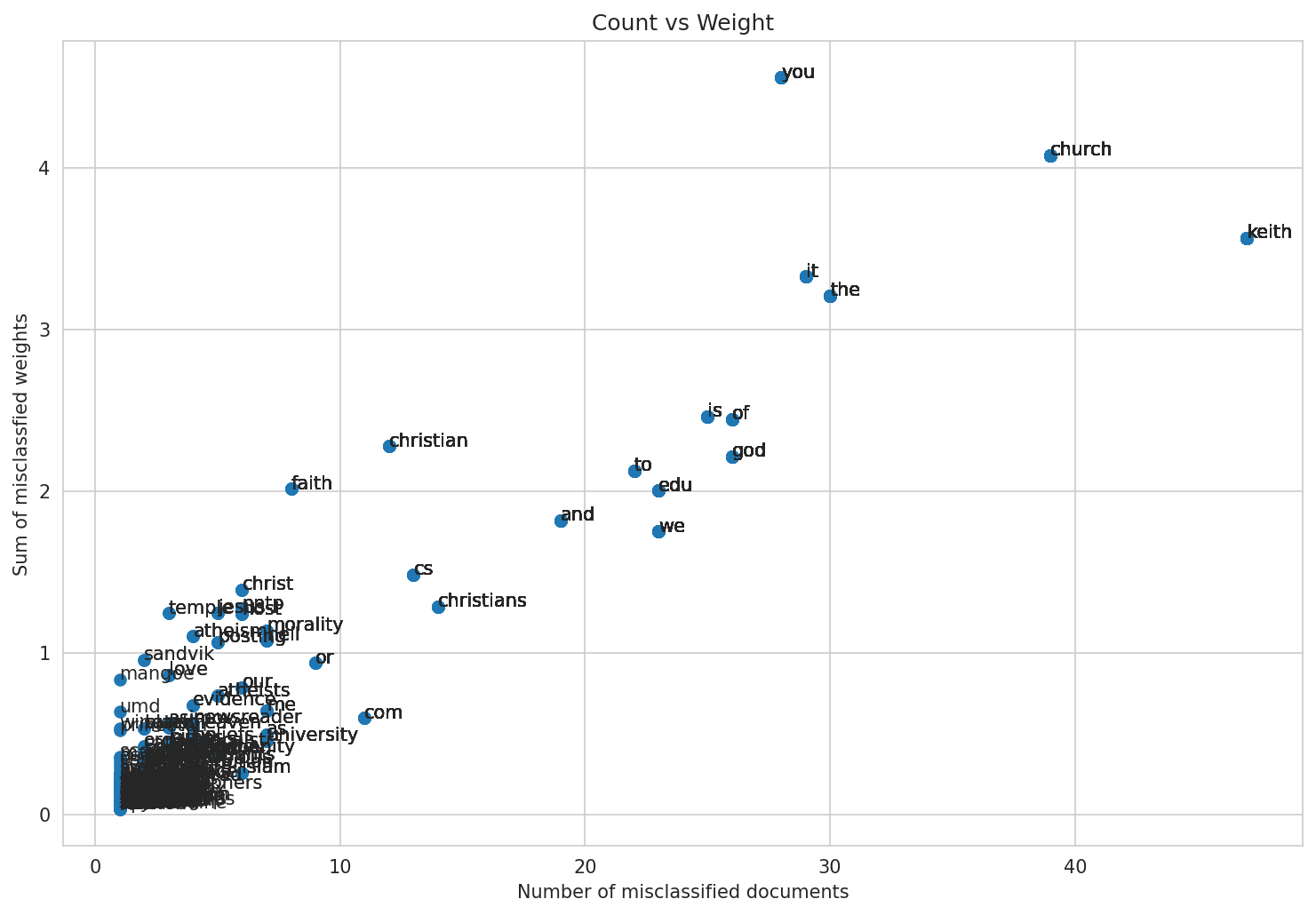

- Misclassified documents were analyzed using SHAP to identify features contributing to errors.

- SHAP summary plots revealed that domain-specific words such as “faith” and “science” contributed strongly to misclassifications.

Feature Selection with SHAP

- SHAP values were used to identify the most important features for the model.

- A new model trained on selected features achieved an improved accuracy of 96.93%.

- Example: A document misclassified before feature selection was correctly classified after retraining the model on the selected features.
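
One common way to turn SHAP values into a feature ranking is to average their absolute values over all documents and keep the top k terms. The sketch below assumes that approach; the selection rule, the value of `k`, and the randomly generated arrays are hypothetical and only stand in for the project's real feature matrix and SHAP values.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-ins for the real feature matrix and SHAP values.
feature_names = np.array(["faith", "the", "science", "and", "church"])
X = rng.random((6, 5))                                # documents x terms
scale = np.array([2.0, 0.1, 1.0, 0.05, 0.5])
shap_values = rng.normal(size=(6, 5)) * scale         # documents x terms

k = 3  # number of features to keep (hypothetical)

# Global importance: mean absolute SHAP value per feature.
importance = np.abs(shap_values).mean(axis=0)

# Indices of the k most important features, most important first.
selected = np.argsort(importance)[::-1][:k]

# Reduced feature matrix on which a new model could be retrained.
X_selected = X[:, selected]

print(feature_names[selected])
print(X_selected.shape)
```

The same column indices must be applied to the test set before evaluating the retrained model, otherwise the feature order no longer matches.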

Notebook and Code

The full implementation is available in the Jupyter Notebook:

Future Work

- Extend SHAP explanations to multi-class classification tasks.

- Experiment with other datasets and classifiers to compare SHAP’s utility.

- Explore advanced feature selection methods informed by SHAP values.